The movie Ghost in the Shell came out a full six years after Masamune Shirow’s cyberpunk manga “Mobile Armored Riot Police” was serialized, and preceded the two anime television series Ghost in the Shell: Stand Alone Complex and Ghost in the Shell: S.A.C. 2nd GIG. The entire franchise is massive and massively influential. It’s fair to say that this film might be the worst in the series to evaluate, but I have to start somewhere, and the start is the most logical place.

Sci: B+ (3 of 4)

How believable are the interfaces?

The main answer to this question depends on whether you believe that artificial intelligence, and its related concept machine sentience, are possible. Several concepts rely on that given.

- Headrest support (for targeting Kusanagi’s neck)

- Tera-keyboard (for interpreting the mind-blowing amount of input)

- Siege Support (if you don’t like the human interpretation)

The main thing that’s frustrating in the film is that this artificial intelligence is not brought to bear in ways that seem obvious.

- Section 9’s crappy security

- Section 6’s crappy sniper tech (though to be fair, this might be a legal thing)

There are even a few interfaces that seem bad, useless, or impossible to apologize for:

These bad interfaces are minor exceptions, and one could argue that they’re not actually broken but rather some artifact of the culture. All in all, not bad for a movie almost two decades old.

Fi: A (4 of 4)

How well do the interfaces inform the narrative of the story?

Technology isn’t window dressing for Ghost in the Shell. It is central to the plot, and internally consistent in all the ways that matter.

- Ghost hacking by public terminal tells of a society whose technology is fundamentally embedded in its infrastructure

- The crappy security tells of heroes being savvy and heroic; it is perhaps a little overexplanatory, but telling

- The spider tank’s vision speaks of a terrifying inhuman intelligence

- The tera-keyboard tells us how far cybernetics have come, and how technology is fundamentally embedded in human bodies.

Interfaces: A- (4 of 4)

How well do the interfaces equip the characters to achieve their goals?

All of them seem to, and some of them do so with a degree of savviness and sophistication.

- Perpvision’s squint gestures let Kusanagi remain motionless while investigating her surroundings

- Thermoptic camouflage lets its users make simple, synecdochic gestures to become quickly hidden

- The tera-keyboard is the most powerful tool for capturing thought as it’s happening that I’ve come across

The few that drag it down are:

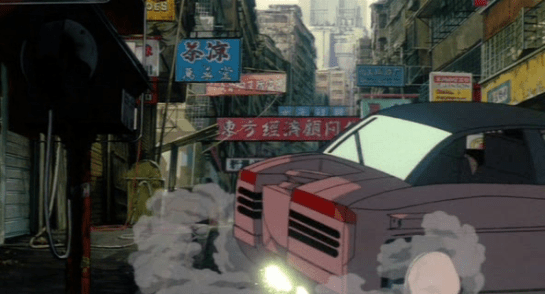

- Notable getaway cars

- A brain VP that is a static picture of the surface

- A visualization that obscures much of the context and useful information

These are more narrative than useful, and prevent me from awarding full marks.

Final Grade A- (11 of 12), BLOCKBUSTER

Related lessons from the book

- The synecdochic gestures in the thermoptic camouflage Builds on what users already know (page 19)

- The heavily layered, transparent green profiles seen in the movie fail to Avoid the confusion caused by too many overlapping, transparent layers (page 54)

- The cyberneticist’s brain VP adheres to most of the Pepper’s ghost style (page 81)

- The neck jacks certainly Let the user relax the body for brain procedures (page 135)

- The scanner virtual reality in fact Deviates from the real world with caution (page 145)

- The tera-keyboard doesn’t quite Visualize brain-reading interfaces (page 154) from the output side, but it certainly answers the opportunity from the input side.

- Chief Aramaki knows too well that The human is sometimes the ideal interface (page 205)

New lessons

- The scanners should Distribute colors for readable contrasts.

- The notion of squint gestures and synecdochic gestures suggests a tweak to the lesson on page 116, to make a new lesson I might call Put actuators in fitting parts.

- Squint gestures also imply Social gestures should be subtle.

- The pair of crappy interfaces remind us that Agentive interfaces should handle the mundane tasks.

To be honest, I would not have counted myself a fan of the movie before this review, even though I’d been to see it in the cinema on its release. After the review, though, I’m thoroughly impressed by the subtlety and richness of its interfaces. I’m now even curious to see the television show since fans say it’s even better.